06 - Multi-Layer Perceptron Networks

"Now we are talking about something that is not linear"

XOR: A Limitation of the Linear Model

- Linear model: just 2 layers, an input layer and an output layer

- One way to make this model nonlinear is to make it wider (still 2 layers)

- This is the idea behind kernel methods

- How do we make it wider? We make the input vector larger

- Usually we do not count the softmax layer

- The way to make it wider is to apply a nonlinear transform $\phi$ to the input, $x \rightarrow \phi(x)$

- Now $\phi(x)$ is a wider vector

- This model will be nonlinear in terms of $x$ (while still linear in terms of $\phi(x)$)

- How do we transform a vector $x$?

- We apply a transformation $\phi$; for example, a polynomial transformation produces a vector whose components are products of the original components

- You end up increasing the dimension of $x$

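- In training, you only need the pairwise inner products of the transformed vectors, for example: $$\phi^T(x_i)\phi(x_j) = (x_i^Tx_j)^2$$ for the second-order transform $\phi(x) = (x_1^2, \sqrt{2}x_1x_2, x_2^2)$ of a 2-D input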

- The pairwise inner product with $\phi$ applied can therefore be computed in the original space, without ever forming $\phi(x)$ explicitly

- There is a more efficient way to do this, which is deep learning (making the model deep)

- Kernel methods = use pairwise inner products

- These can be computed very efficiently

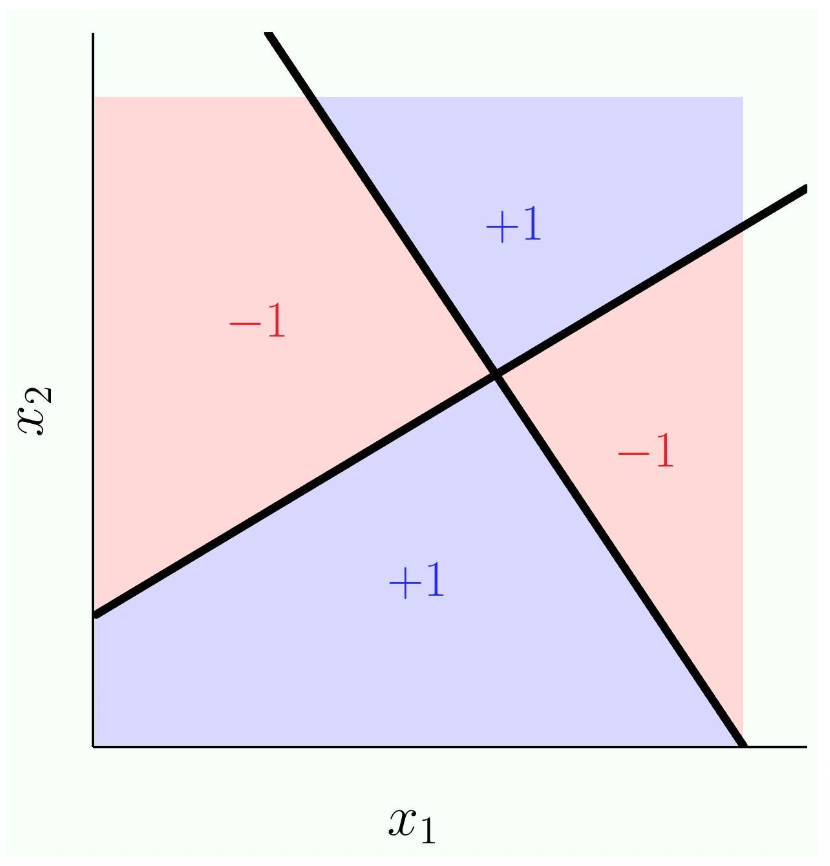

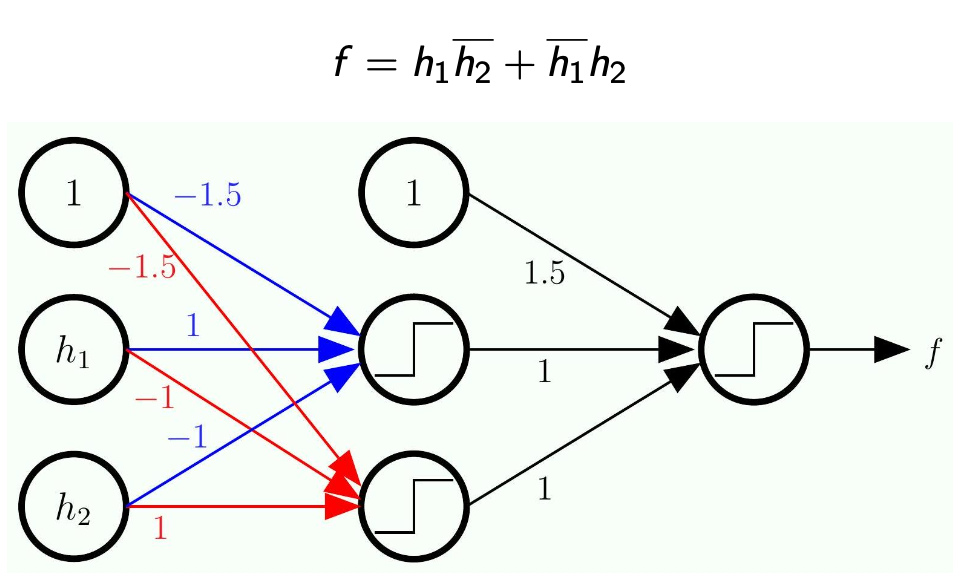
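The kernel idea can be checked numerically. The sketch below assumes the second-order transform $\phi(x) = (x_1^2, \sqrt{2}x_1x_2, x_2^2)$ for a 2-D input (an illustrative choice); `phi` and `kernel` are hypothetical helper names. It compares the inner product computed in the wider space with the one computed directly from $x_i^Tx_j$.

```python
import numpy as np

def phi(x):
    """Explicit second-order transform of a 2-D input (illustrative choice)."""
    x1, x2 = x
    return np.array([x1 ** 2, np.sqrt(2) * x1 * x2, x2 ** 2])

def kernel(xi, xj):
    """Same inner product, computed without building phi: (xi^T xj)^2."""
    return float(np.dot(xi, xj)) ** 2

xi = np.array([1.0, 2.0])
xj = np.array([3.0, -1.0])

explicit = float(phi(xi) @ phi(xj))  # inner product in the 3-D transformed space
implicit = kernel(xi, xj)            # computed in the original 2-D space
print(explicit, implicit)            # equal up to floating-point rounding
```

The kernel only costs one 2-D inner product, while the explicit route builds two 3-D vectors; the gap grows quickly for higher-order transforms.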

Decomposing XOR

- Exclusive OR: $f = \text{XOR}(h_1, h_2)$

- Basically means: $f = h_1\bar{h}_2 + \bar{h}_1h_2$ (here a product means AND and $+$ means OR)

- If $h_1$ and $h_2$ both give you positive, or both give you negative, the result is negative (-1)

- They need to be different for XOR to output positive (+1)

- So the key here is that we use linear models, but we use several of them and put them together

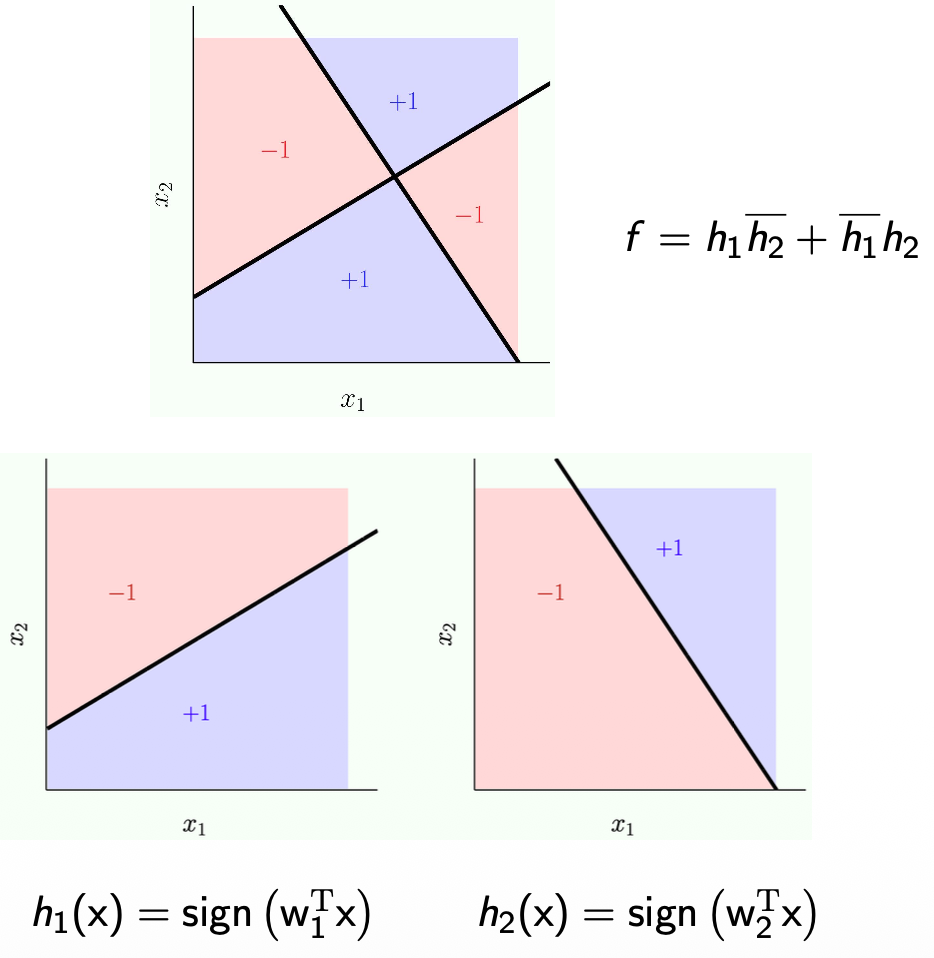

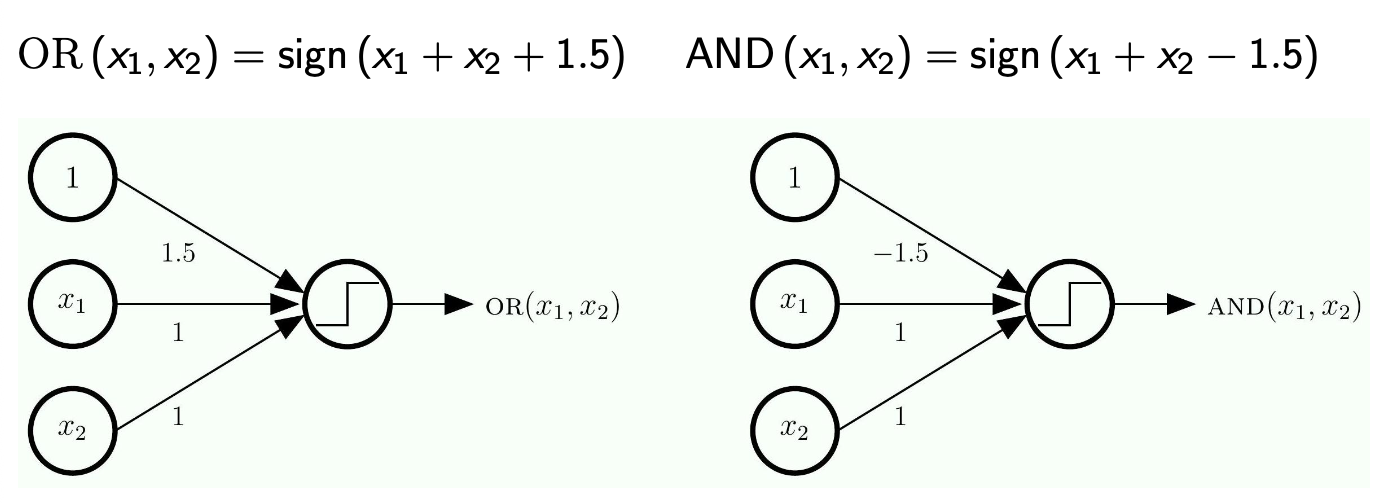

Perceptrons for OR and AND

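- OR: $\text{OR}(x_1, x_2) = \text{sign}(x_1 + x_2 + 1.5)$

- If one or both of $x_1$ and $x_2$ is positive, then OR is positive

- Why +1.5?

- It is the threshold that makes the perceptron reproduce the OR truth table

- With inputs of ±1, $x_1 + x_2 \in \{-2, 0, 2\}$, and adding 1.5 makes the sum negative only when both inputs are -1

- The inputs $x_1$ and $x_2$ are just +1 or -1

- AND: $\text{AND}(x_1, x_2) = \text{sign}(x_1 + x_2 - 1.5)$

- If both $x_1$ and $x_2$ are positive, then AND is positive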

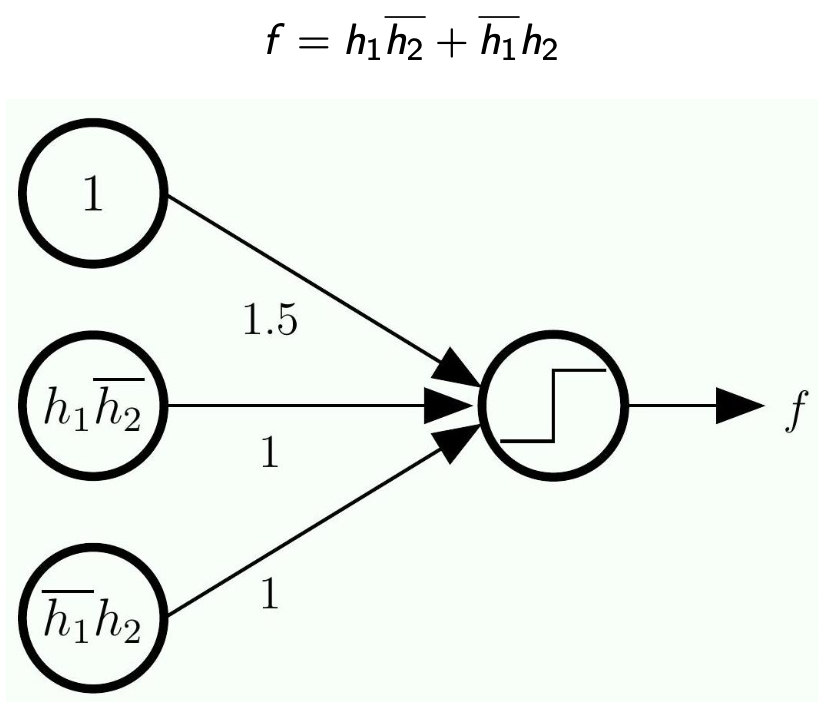
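To check the ±1.5 thresholds, here is a short sketch that evaluates both perceptrons on all four ±1 input pairs. The names `sign`, `or_gate`, and `and_gate` are ours, not from the lecture.

```python
import itertools

def sign(s):
    # With ±1 inputs and a ±1.5 threshold, s is never exactly 0 here
    return 1 if s > 0 else -1

def or_gate(x1, x2):
    return sign(x1 + x2 + 1.5)   # negative only when both inputs are -1

def and_gate(x1, x2):
    return sign(x1 + x2 - 1.5)   # positive only when both inputs are +1

for x1, x2 in itertools.product([-1, 1], repeat=2):
    print(f"x1={x1:+d} x2={x2:+d}  OR={or_gate(x1, x2):+d}  AND={and_gate(x1, x2):+d}")
```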

Representing $f$ Using OR and AND

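- Here we work backward because we are given $f = h_1\bar{h}_2 + \bar{h}_1h_2$

- We need to expand each of the terms of $f$ into OR and AND units

- Here, $h_1$ and $h_2$ are the inputs and the output is $f$

- The next step is to expand each of $h_1$ and $h_2$

- Note that each of $h_1$ and $h_2$ is a linear model of the input

- The $-1.5$ is the threshold term we added to implement AND (the OR unit uses $+1.5$)

- Note the bar on top means negation (NOT); for ±1 values, $\bar{h} = -h$

- Putting it together: $f = \text{OR}\big(\text{AND}(h_1, \bar{h}_2),\ \text{AND}(\bar{h}_1, h_2)\big)$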

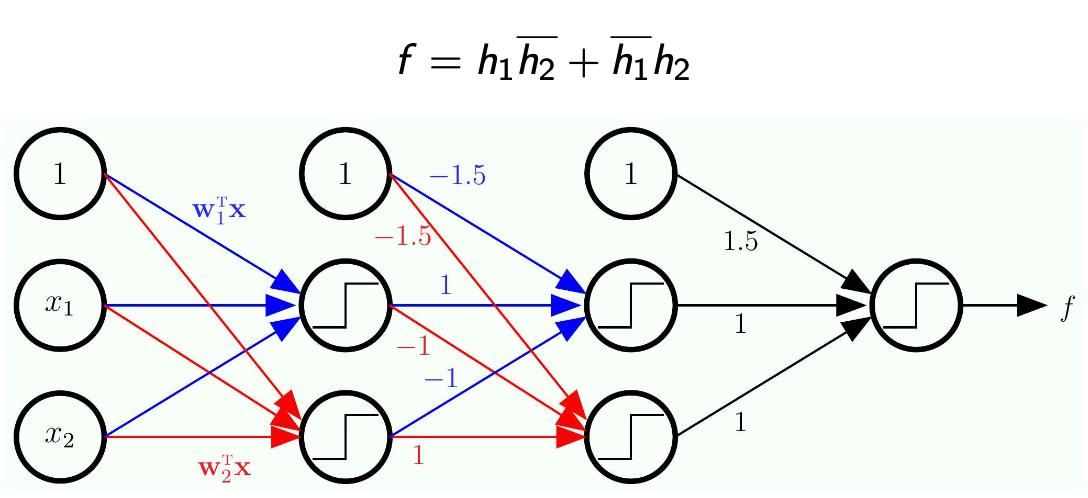
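The OR-of-ANDs expansion can be verified over all four ±1 combinations of $h_1$ and $h_2$. This sketch reuses the ±1.5 perceptrons for OR and AND and treats the bar (NOT) as negation; the function names are hypothetical.

```python
import itertools

def sign(s):
    return 1 if s > 0 else -1

def or_gate(a, b):
    return sign(a + b + 1.5)

def and_gate(a, b):
    return sign(a + b - 1.5)

def xor_from_or_and(h1, h2):
    # f = OR(AND(h1, not h2), AND(not h1, h2)); "not" on a ±1 value is negation
    return or_gate(and_gate(h1, -h2), and_gate(-h1, h2))

for h1, h2 in itertools.product([-1, 1], repeat=2):
    print(h1, h2, xor_from_or_and(h1, h2))
```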

- Now, since we know each of $h_1$ and $h_2$ is a linear model of the inputs $x_1$ and $x_2$, we decompose even more

- Note how this became a network with multiple layers!

- Nothing is trained here

- This is just a completely hardwired network

- It solves problems that linear models cannot solve on their own

- Will this method enable us to build more complex models (something more complex than XOR)?

- Yes! You can always convert any logic function into this form (an OR of ANDs)

- As long as you can convert a function to this standard form, you will be able to use a network just like this

- The logic form will just look longer
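A hardwired 3-layer network can be written as three weight matrices applied in sequence, each preceded by the constant "1" unit. This is a sketch under an assumption: the lecture does not give $h_1$ and $h_2$ here, so we pick the hypothetical perceptrons $h_1(x) = \text{sign}(x_1)$ and $h_2(x) = \text{sign}(x_2)$. Nothing is trained; every weight is set by hand.

```python
import numpy as np

# Hypothetical first layer: h1(x) = sign(x1), h2(x) = sign(x2)
W1 = np.array([[0.0, 0.0],    # bias row
               [1.0, 0.0],    # weights from x1
               [0.0, 1.0]])   # weights from x2
# AND(h1, not h2) and AND(not h1, h2); "not" flips the sign of a weight
W2 = np.array([[-1.5, -1.5],
               [ 1.0, -1.0],
               [-1.0,  1.0]])
# OR of the two AND units
W3 = np.array([[1.5],
               [1.0],
               [1.0]])

def hardwired_xor(x):
    """Forward pass through the 3-layer sign network; x = (x1, x2), off the axes."""
    out = np.asarray(x, dtype=float)
    for W in (W1, W2, W3):
        out = np.concatenate(([1.0], out))  # prepend the constant "1" unit
        out = np.sign(W.T @ out)            # linear signal, then sign
    return int(out[0])

for x in [(2.0, 3.0), (-1.0, 0.5), (0.7, -2.0), (-1.5, -0.3)]:
    print(x, hardwired_xor(x))
```

Points on the axes are excluded because `np.sign(0)` returns 0, which is not a valid ±1 value for the next layer.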

The Multilayer Perceptron (MLP)

- More layers allow us to implement $f$, which no single linear model can

- These additional layers are called hidden layers

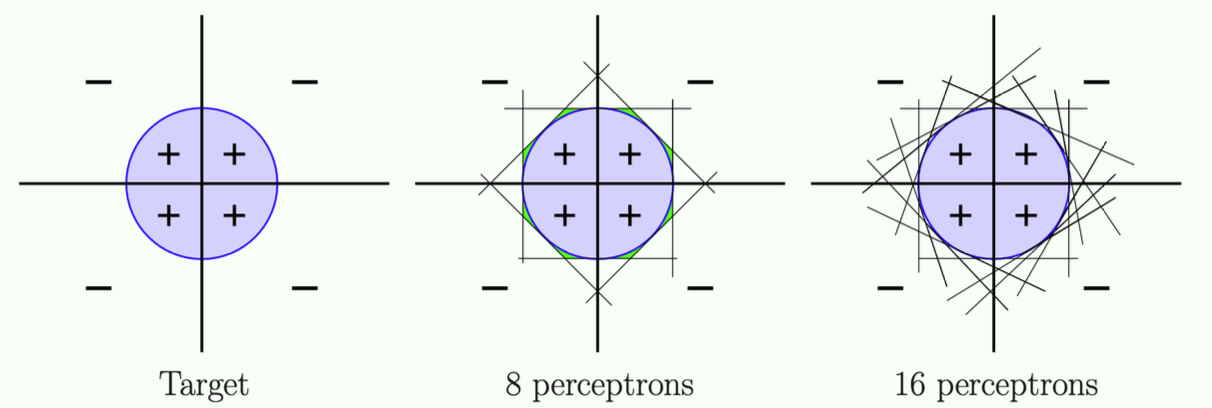

Universal Approximation

- Any target function $f$ that can be decomposed into linear separators can be implemented by a 3-layer MLP.

- A sufficiently smooth separator can "essentially" be decomposed into linear separators.

Theory:

- More units/More linear models -> more accurate predictions

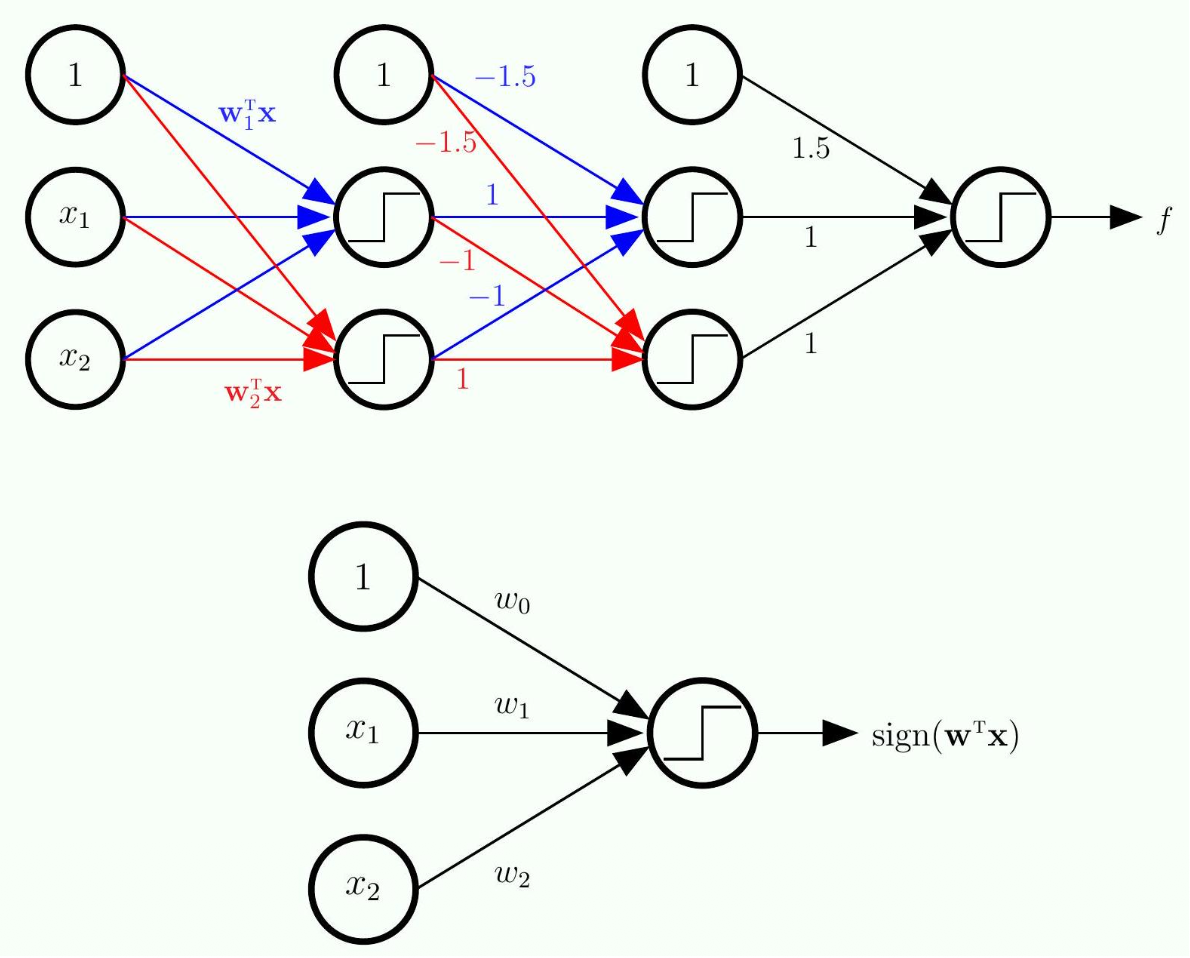

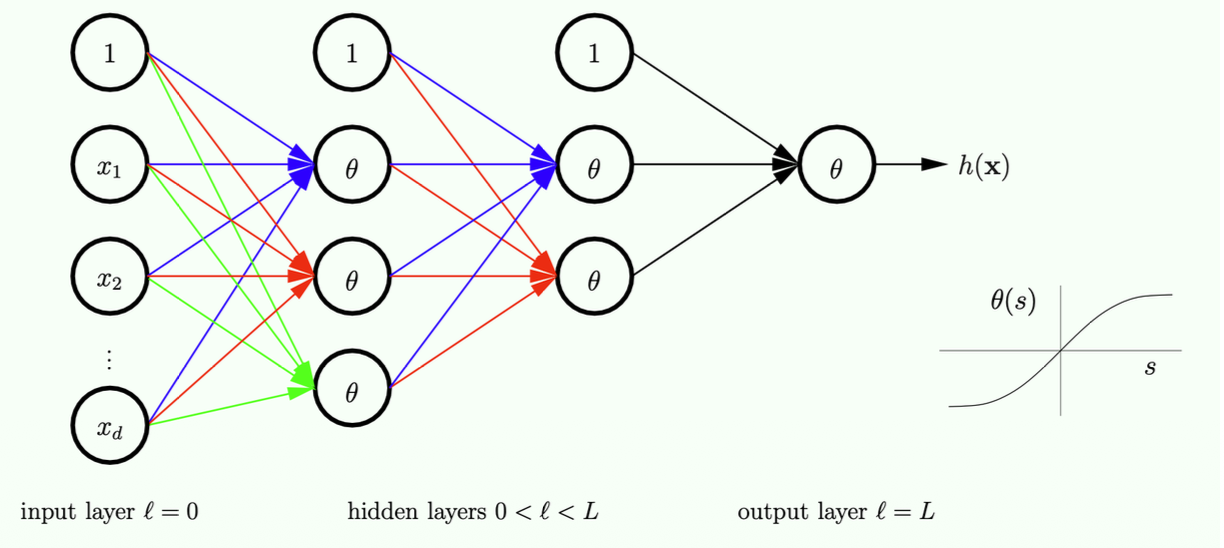

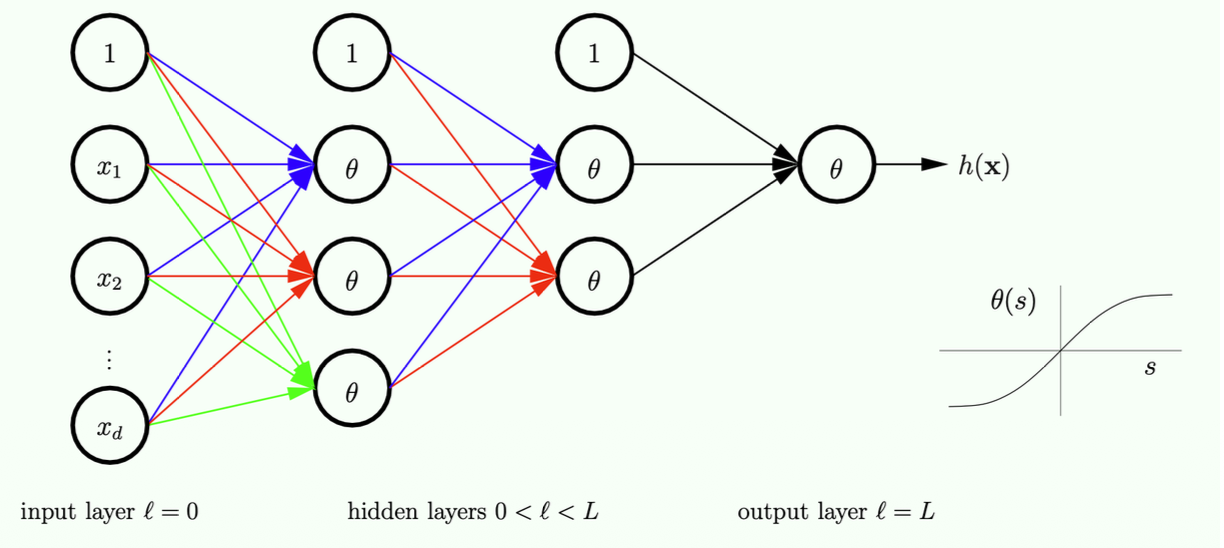

The Neural Network

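$h(x)$ is not smooth (due to the sign function), so we cannot use gradient descent. Replacing sign with the smooth approximation $\text{sign}(x) \approx \tanh(x)$ lets us use gradient descent to minimize $E_{\text{in}}$.

- $\theta$ needs to be differentiable

- This function ($\tanh$) was used for many years, until recently, when it was replaced; we will talk about this later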

- Note: we use training data to determine all the weights here.

- The question is how do we obtain those connection weights?

- If I have these values on the left, how do we compute the values on the right (in the next layer)?

- The "1" on each layer is essentially the threshold term

- That is why this unit has no input but always outputs a fixed value (1)

- Note this is not necessarily the most economical way

- If you use more layers, the total number of units might be smaller; that is why people usually use many, many layers, even thousands

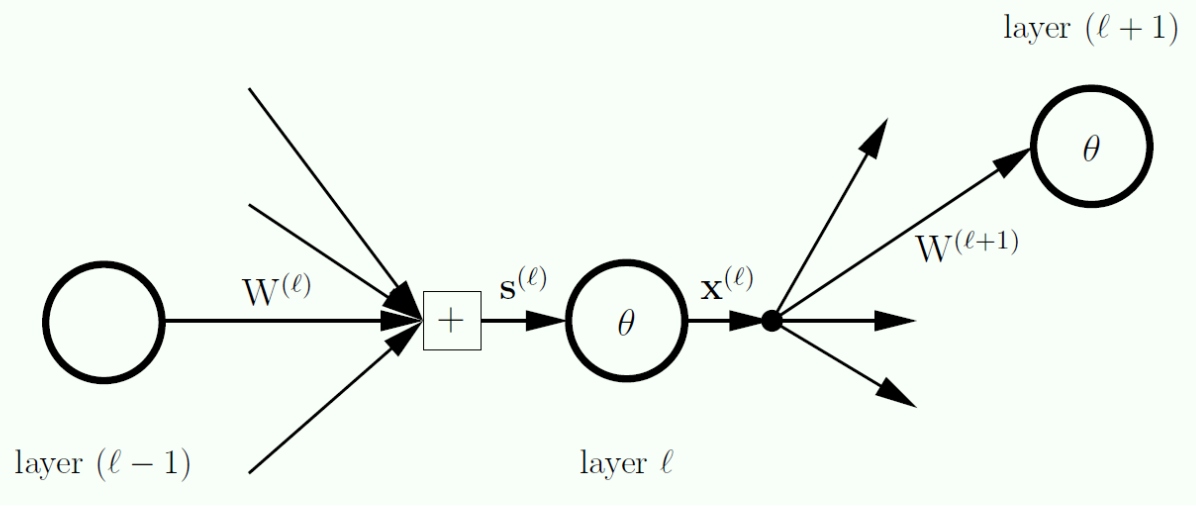
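Why tanh works where sign does not can be seen numerically. In this sketch (our own illustration), scaling the input makes $\tanh$ approach $\text{sign}$, while its derivative $1 - \tanh^2(s)$ stays defined and nonzero at every $s$; `tanh_grad` is a hypothetical helper name.

```python
import numpy as np

def tanh_grad(s):
    """Derivative of tanh: 1 - tanh(s)^2."""
    return 1.0 - np.tanh(s) ** 2

s = np.array([-3.0, -0.5, 0.5, 3.0])
for scale in (1.0, 5.0, 50.0):
    # tanh(scale * s) gets closer to sign(s) as the scale grows
    gap = np.max(np.abs(np.tanh(scale * s) - np.sign(s)))
    print(f"scale={scale:5.1f}  max |tanh - sign| = {gap:.2e}")

# Unlike sign (gradient 0 almost everywhere), tanh has a usable derivative
print(tanh_grad(s))
```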

Zooming into a Hidden Node

(Figure: a hidden node in layer $\ell$, between the previous and next layers.)

Notes:

- What are the operations to move between layers?

- For each layer we have a signal ($s$) going into that layer

- For each layer we put the inputs for each unit into a vector; this vector is called $s^{(\ell)}$

- Note that $s^{(\ell)}$ does not include the bias term

- Summary: each layer has an input vector $s^{(\ell)}$ and an output vector $x^{(\ell)}$

- Between two layers we build a weight matrix $W^{(\ell)}$ where each row is a unit of the previous layer (including the bias) and each column is a unit of the next layer

- What would be the size of this matrix?

- This will be on the exam!

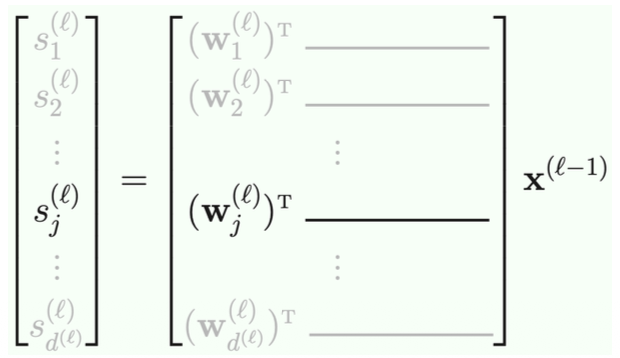
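The matrix-size question can be answered mechanically: $W^{(\ell)}$ has $d^{(\ell-1)} + 1$ rows (the previous layer plus its bias unit) and $d^{(\ell)}$ columns. A minimal sketch for a hypothetical architecture with 2 inputs, hidden layers of 3 and 2 units, and 1 output; the middle matrix is the $4 \times 2$ example from the notes.

```python
def weight_shapes(dims):
    """dims = [d0, d1, ..., dL]: units per layer, bias not counted.
    W^(l) connects layer l-1 (plus its bias unit) to layer l."""
    return [(dims[l - 1] + 1, dims[l]) for l in range(1, len(dims))]

def num_parameters(dims):
    """Total number of weights (including bias weights) in the network."""
    return sum(rows * cols for rows, cols in weight_shapes(dims))

dims = [2, 3, 2, 1]            # hypothetical architecture
print(weight_shapes(dims))     # [(3, 3), (4, 2), (3, 1)]
print(num_parameters(dims))    # 20
```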

The Linear Signal

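Input $s^{(\ell)}$ is a linear combination (using the weights) of the outputs of the previous layer, $x^{(\ell-1)}$: $$s_j^{(\ell)} = \sum_{i=0}^{d^{(\ell-1)}} w_{ij}^{(\ell)}x_i^{(\ell-1)}, \qquad s^{(\ell)} = (W^{(\ell)})^T x^{(\ell-1)}$$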

The question:

- If I have the input for one layer, how do we calculate the output?

- What is the operation? This is $\theta$

- We need to take the vector $s^{(\ell)}$ and apply $\theta$ to every element (element-wise)

- Now, If I know the output values for this layer, how do we calculate the input values for the next layer?

- If you look at the graph, the input of every unit depends on the outputs of the previous layer, but how do we compute it?

- What else do we need? -> the weights

- For each unit in the next layer, we take 1 column from the weight matrix.

- Remember that the weight matrix has one row per unit in the previous layer (including the bias "1") and one column per unit in the next layer

- For example, between the second layer $(1, x_1, x_2, x_3)$ and the third layer $(1, x_1, x_2)$ in the example above we will have a $4 \times 2$ matrix

- In order to multiply the weights by the $x^{(\ell-1)}$ vector, you need to transpose the matrix, $(W^{(\ell)})^T x^{(\ell-1)}$, to account for the different numbers of rows and columns

- The question here is, given the input of a layer, how do we compute the input for the next layer?

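- The operation is simple: you just apply $\theta$ to get the output of the layer, then the weights to get the input of the next layer

- Take that vector, apply $\theta$ element-wise and prepend a 1 (the threshold)

- Of course the output, by convention, needs to be a column vector

- This is how you get to the form: $$x^{(\ell)} = \begin{bmatrix} 1 \\ \theta(s^{(\ell)}) \end{bmatrix}, \qquad s^{(\ell+1)} = (W^{(\ell+1)})^T x^{(\ell)}$$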

- All of this will be on the exam

- It will ask for the number of parameters, etc.
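One layer step, $s = W^T x^{(\ell-1)}$ followed by $x = [1; \theta(s)]$, fits in a few lines. This sketch assumes $\theta = \tanh$ and uses 1-D NumPy arrays instead of explicit column vectors; the weight values are made up, and the shape matches the notes' $4 \times 2$ example (3 units plus bias feeding 2 units).

```python
import numpy as np

def next_layer_output(W, x_prev):
    """s = W^T x_prev, then x = [1; tanh(s)].
    W has shape (d_prev + 1, d); x_prev already starts with the bias 1."""
    s = W.T @ x_prev                           # linear signal, shape (d,)
    x = np.concatenate(([1.0], np.tanh(s)))    # element-wise theta, prepend bias
    return s, x

# Hypothetical weights: 3 units (+ bias) feeding 2 units, so W is 4 x 2
W = np.array([[ 0.1, -0.2],
              [ 0.5,  0.3],
              [-0.4,  0.8],
              [ 0.2,  0.0]])
x_prev = np.array([1.0, 0.5, -1.0, 2.0])       # (1, x1, x2, x3)
s, x = next_layer_output(W, x_prev)
print(s.shape, x.shape)                        # (2,) (3,)
```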

Forward Propagation: Computing $h(x)$

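Forward propagation to compute $h(x)$:

$x^{(0)} \leftarrow x$ [Initialization]

- for $\ell = 1$ to $L$ do [Forward Propagation]
    - $s^{(\ell)} \leftarrow (W^{(\ell)})^T x^{(\ell-1)}$
    - $x^{(\ell)} \leftarrow \begin{bmatrix} 1 \\ \theta(s^{(\ell)}) \end{bmatrix}$

- end for

$h(x) = x^{(L)}$ [Output]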

Notes:

- You have $x$, for which you want to make a prediction

- The input layer is $x^{(0)}$ (there is no $s^{(0)}$ in the input layer)

- To compute the input to the next layer we apply $(W^{(1)})^T$, then to get that layer's output you apply $\theta$, and so on; you repeat this process

- There are 2 operations to reach the next layer: multiply by $W^T$, then apply $\theta$ element-wise
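The forward propagation loop can be sketched directly. Assumptions: $\theta = \tanh$ in every layer, `Ws[l-1]` holds $W^{(\ell)}$ with shape $(d^{(\ell-1)}+1) \times d^{(\ell)}$, the raw input comes without its leading 1, and the output layer has no bias unit, so $h(x) = \theta(s^{(L)})$. The architecture and random weights are hypothetical.

```python
import numpy as np

def forward(Ws, x):
    """Forward propagation; returns the signals s^(l) and outputs x^(l)."""
    xs = [np.concatenate(([1.0], x))]   # x^(0) with the bias 1
    ss = [None]                         # no s^(0) in the input layer
    L = len(Ws)
    for l in range(1, L + 1):
        s = Ws[l - 1].T @ xs[-1]        # s^(l) = (W^(l))^T x^(l-1)
        theta = np.tanh(s)
        # hidden layers prepend the bias 1; the output layer does not
        xs.append(theta if l == L else np.concatenate(([1.0], theta)))
        ss.append(s)
    return ss, xs                       # h(x) = xs[-1]

rng = np.random.default_rng(0)
dims = [2, 3, 2, 1]                     # hypothetical architecture
Ws = [rng.normal(scale=0.5, size=(dims[l - 1] + 1, dims[l])) for l in range(1, len(dims))]
ss, xs = forward(Ws, np.array([0.3, -0.7]))
print(xs[-1])                           # h(x), a length-1 vector in (-1, 1)
```

Keeping every `s` and `x` (rather than only the last one) matters later: backward propagation needs these saved values.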

Minimizing Ein

/CSCE-421/Visual%20Aids/Pasted%20image%2020260211234601.png)

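Using $\theta = \tanh$ makes $E_{\text{in}}$ differentiable, so we can minimize it with gradient descent.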

Notes:

- Now this is about prediction.

- Backward propagation is used in training to compute the gradient that adjusts the weights; it must be preceded by a forward propagation

- The cost of training the network on a single example is roughly twice the cost of doing a prediction

- Given some samples, how do you train your network?

- We will write a loop and iteratively update each of the weight matrices $W^{(\ell)}$.

Gradient Descent

Notes:

- Think of $W$ as the collection of all weights, $W = \{W^{(1)}, W^{(2)}, \dots, W^{(L)}\}$, updated as $W(t+1) = W(t) - \eta\nabla E_{\text{in}}(W(t))$

- The key here is still how to compute the derivative; then you plug in $W(t)$ and move in the negative gradient direction

- The step size $\eta$ is pretty much empirical, and the update looks this way for all training models, but the hard part is the gradient

Gradient of Ein

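We need $\dfrac{\partial e(x)}{\partial W^{(\ell)}}$ for a single sample, since $$\frac{\partial E_{\text{in}}}{\partial W^{(\ell)}} = \frac{1}{N}\sum_{n=1}^{N}\frac{\partial e_n}{\partial W^{(\ell)}}$$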

Notes:

- To compute the gradient, pretty much you need to repeatedly use the chain rule (Computing this derivative is nothing but chain rule)

- Instead of going through all the details we will just review the results intuitively.

- Again, remember that for a loss function $E_{\text{in}}$, you compute the loss of every sample and then sum (average) all of them

- If you can get the loss for a single sample, then you can just do summation of all the samples to get the total loss

- We just need to get the gradient of a single sample and then just do a summation.
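The "gradient of one sample, then average" idea, combined with the gradient descent update, can be shown on the smallest possible network: a single tanh unit $h(x) = \tanh(w^Tx)$ with squared error. The toy data, step size, and helper names are hypothetical; the per-sample gradient follows from the chain rule.

```python
import numpy as np

def sample_error_and_grad(w, x, y):
    """Squared error of one tanh unit and its gradient (chain rule)."""
    h = np.tanh(w @ x)
    e = (h - y) ** 2
    grad = 2.0 * (h - y) * (1.0 - h ** 2) * x
    return e, grad

def ein_and_grad(w, X, Y):
    """E_in and its gradient are averages of the per-sample quantities."""
    pairs = [sample_error_and_grad(w, x, y) for x, y in zip(X, Y)]
    ein = float(np.mean([e for e, _ in pairs]))
    grad = np.mean([g for _, g in pairs], axis=0)
    return ein, grad

# Hypothetical toy data; the first column is the constant 1
X = np.array([[1.0, 0.5, 1.0], [1.0, -1.0, 0.2], [1.0, 0.3, -0.8]])
Y = np.array([1.0, -1.0, -1.0])

w, eta, history = np.zeros(3), 0.5, []
for t in range(20):
    ein, grad = ein_and_grad(w, X, Y)
    history.append(ein)
    w = w - eta * grad          # W(t+1) = W(t) - eta * grad E_in(W(t))
print(history[0], history[-1])  # E_in starts at 1.0 and decreases
```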

Algorithmic Approach

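$e(x)$ depends on $W^{(\ell)}$ only through $s^{(\ell)} = (W^{(\ell)})^T x^{(\ell-1)}$. Define the sensitivity: $$\delta^{(\ell)} = \frac{\partial e}{\partial s^{(\ell)}}$$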

Notes:

- We need to derive one more thing, called sensitivity.

- Above is the definition of sensitivity

- It is a vector, and there is a sensitivity for each layer

- It is the partial derivative of the error (just a number) with respect to each element of $s^{(\ell)}$ (the input vector into this layer)

- But what does sensitivity measure?

- Look at it element-wise

- Basically it measures how much the input affects the error (e)

- This is essentially the idea of a partial derivative, if you have a unit change of the input, how much the output would change?

- Think of taking the derivative of $y = 2x$ with respect to $x$

- It is just 2, this means if the input is increased by one unit, the output is increased by two units.

- If we have a unit change in the input, how much will $e$ change?

- If the sensitivity for a unit is 0, it will not affect e
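The sensitivity of an output unit can be compared against the "unit change in the input" intuition with a finite difference. This sketch assumes a tanh output unit with squared error $e = (\tanh(s) - y)^2$, so $\delta = 2(\tanh(s) - y)(1 - \tanh^2(s))$; the helper names and sample values are hypothetical.

```python
import numpy as np

def output_error(s, y):
    """Squared error of a tanh output unit, as a function of its input signal s."""
    return (np.tanh(s) - y) ** 2

def output_sensitivity(s, y):
    """delta = de/ds = 2 (tanh(s) - y) * tanh'(s)."""
    return 2.0 * (np.tanh(s) - y) * (1.0 - np.tanh(s) ** 2)

y, eps = 1.0, 1e-6
for s in (-2.0, 0.0, 0.5, 4.0):
    # nudge s slightly and measure how much e changes
    numeric = (output_error(s + eps, y) - output_error(s - eps, y)) / (2 * eps)
    print(f"s={s:+.1f}  delta={output_sensitivity(s, y):+.5f}  finite diff={numeric:+.5f}")
```

At large $s$ the sensitivity is nearly 0: changing that unit's input barely affects $e$.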

/CSCE-421/Visual%20Aids/Pasted%20image%2020260211234850.png)

Notes:

- $W^{(\ell)}$ is the connection between two layers

- So now we want to know the partial derivative of the error with respect to $W^{(\ell)}$

- Remember rows come from the previous layer (i), and columns come from the next layer (j)

- What we want to answer is: if this $w_{ij}^{(\ell)}$ is changed by a small amount, how much will $e$ change?

- If we have a unit change in each of the $w_{ij}^{(\ell)}$, how much does it change the error?

- What do we need to know to compute this?

- Suppose you know the sensitivity of every layer

- What affects the derivative with respect to $w_{ij}^{(\ell)}$?

- If the sensitivity for a unit is large, a small change in one entry causes a large change in the error; you do not need to consider anything downstream of it.

- The sensitivity basically tells you everything after this layer encoded into a single vector

- What else is important here?

- If the output of unit $i$ has a zero value, and the derivative measures the effect of a unit change in the connection $w_{ij}^{(\ell)}$, what would happen here?

- This output value is multiplied by $w_{ij}^{(\ell)}$, so what would happen to $j$? There is no change; the derivative is 0.

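- The derivative with respect to $w_{ij}^{(\ell)}$ is simply the output value of $i$ times the sensitivity value of $j$: $$\frac{\partial e}{\partial w_{ij}^{(\ell)}} = x_i^{(\ell-1)}\delta_j^{(\ell)}$$

- If you collect all of that into a matrix you get: $$\frac{\partial e}{\partial W^{(\ell)}} = x^{(\ell-1)}(\delta^{(\ell)})^T$$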

- How can we obtain $x^{(\ell-1)}$?

- Again, we are talking about a single sample, because if we can do this for a single sample, we can do this for every sample

- Just use the forward propagation formula (Forward Propagation: Computing $h(x)$): if you do forward propagation you get $x^{(\ell)}$ for each layer.

- Question: the $W$s are randomly initialized; what happens if we initialize them to be all zeros?

- In logistic regression you can do that because you only have one layer, but what would be the consequence here?

- This is an exam question: if you have a network of $L$ layers, is initializing $W$ to be all zeros a good idea?

- It is not a good idea: with zero matrices, forward propagation produces all-zero signals $s^{(\ell)}$, and with $\theta = \tanh$, if the input is zero the output is zero, so the $x^{(\ell)}$ of the next layer will be all zeros (except the bias 1).

- Takeaway: the derivative with respect to $W$ is proportional to the sensitivity.
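The all-zeros initialization question can be tested directly. This sketch assumes $\theta = \tanh$, squared error, no bias unit on the output layer, and a hypothetical architecture and sample. It runs 100 gradient descent steps from zero weights using $\partial e/\partial W^{(\ell)} = x^{(\ell-1)}(\delta^{(\ell)})^T$ and counts the nonzero weights afterwards.

```python
import numpy as np

def forward(Ws, x):
    """Forward propagation with theta = tanh; no bias unit on the output layer."""
    xs = [np.concatenate(([1.0], x))]
    for l, W in enumerate(Ws, start=1):
        t = np.tanh(W.T @ xs[-1])
        xs.append(t if l == len(Ws) else np.concatenate(([1.0], t)))
    return xs

def gradients(Ws, x, y):
    """Per-sample gradients de/dW^(l) = x^(l-1) (delta^(l))^T, e = (h(x) - y)^2."""
    xs = forward(Ws, x)
    L = len(Ws)
    deltas = [None] * (L + 1)
    deltas[L] = 2.0 * (xs[L] - y) * (1.0 - xs[L] ** 2)
    for l in range(L - 1, 0, -1):
        deltas[l] = (1.0 - xs[l][1:] ** 2) * (Ws[l] @ deltas[l + 1])[1:]
    return [np.outer(xs[l - 1], deltas[l]) for l in range(1, L + 1)]

dims = [2, 3, 2, 1]                                  # hypothetical architecture
Ws = [np.zeros((dims[l - 1] + 1, dims[l])) for l in range(1, len(dims))]
x, y = np.array([0.4, -0.9]), 1.0                    # hypothetical sample

eta = 0.1
for _ in range(100):                                  # plain gradient descent
    grads = gradients(Ws, x, y)
    Ws = [W - eta * G for W, G in zip(Ws, grads)]

# Only the output bias ever moves; the hidden layers stay exactly zero
print([int(np.count_nonzero(W)) for W in Ws])        # [0, 0, 1]
```

Because the non-bias rows of the last matrix stay zero, every hidden sensitivity is zero, so the hidden weights never receive a gradient.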

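Computing $\delta^{(\ell)}$

Multiple applications of the chain rule: $$\delta^{(\ell)} = \theta'(s^{(\ell)}) \otimes \left[W^{(\ell+1)}\delta^{(\ell+1)}\right]_{1}^{d^{(\ell)}}$$ where $\otimes$ is element-wise multiplication and $[\cdot]_{1}^{d^{(\ell)}}$ keeps components $1$ through $d^{(\ell)}$ (dropping the bias component 0).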

/CSCE-421/Visual%20Aids/Pasted%20image%2020260211235009.png)

Notes:

- To compute the sensitivity we use backward propagation, we start from the last layer, until we reach the first one.

- All we need to do is given a particular delta, how do we compute the delta from the previous layer

- Delta is a derivative, so we need to backward propagate this derivative; we are propagating derivatives

- Easier case: given $\delta^{(\ell)}$, how do we calculate $\delta^{(\ell-1)}$?

- Basically, you need to know the partial derivative with respect to the output

- If we know the partial derivative with respect to the output of this layer, how do we calculate the partial derivative with respect to the input?

- Note you are not propagating the actual value, you are propagating the derivative

- You have to compute the derivative of $\theta$ to get a function $\theta'$, and then plug in the value $s^{(\ell)}$ you got from forward propagation

- Why is this important?

- If you take the derivative of $\tanh$, $\tanh'(s) = 1 - \tanh^2(s)$, you can see that for large $|s|$ the derivative will be close to 0.

- If your input value to $\tanh$ is very large in magnitude, your gradient will be close to 0.

- The backward propagation in some units will depend on the forward propagation; that is why we first need to do forward propagation.

- Why does the gradient of this nonlinear function vary along the curve?

- When we talk about $\theta'(s^{(\ell)})$, we are talking about it element-wise (each unit)

- You compute the gradient of $\theta$, and then you plug in $s^{(\ell)}$.

- The gradient of $\tanh$ is greatest when its input is close to 0.
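Because $\tanh'(s) = 1 - \tanh^2(s)$, backward propagation can reuse the outputs $x = \tanh(s)$ saved during forward propagation. A small sketch (helper name hypothetical) showing that the derivative is largest at $s = 0$ and vanishes as $s$ grows:

```python
import numpy as np

def tanh_prime_from_output(x):
    """tanh'(s) expressed through the forward-pass output x = tanh(s)."""
    return 1.0 - x ** 2

s = np.array([0.0, 1.0, 2.0, 5.0, 10.0])
x = np.tanh(s)                     # values saved during forward propagation
print(tanh_prime_from_output(x))   # 1 at s = 0, close to 0 for large s
```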

/CSCE-421/Visual%20Aids/Pasted%20image%2020260211235051.png)

Notes:

- How do we backward propagate through the $W$ matrix?

- It requires two operations

- First, multiply by the $W^{(\ell+1)}$ matrix

- Then, multiply element-wise by $\theta'(s^{(\ell)})$

- Each weight (each line in the diagram) is multiplied by the sensitivity of the unit it connects to; then we sum them up to get the derivative

- You take the $i$th row of $W^{(\ell+1)}$ and take its inner product with the sensitivity vector $\delta^{(\ell+1)}$. This is represented as a matrix multiplication, $W^{(\ell+1)}\delta^{(\ell+1)}$

- This is because this product eventually becomes a column vector

- Multiply the $W^{(\ell+1)}$ matrix by the sensitivity vector, but note that you remove the first element of the result.

- The 1 does not need to be backward propagated because there is no input for the "1" entry.

- Note that at prediction time you only need forward propagation, but in training you need to do forward propagation first and then backward propagation

- A training step (forward plus backward propagation) costs roughly twice as much as forward propagation alone

- In forward propagation we do a matrix multiplication

- In backward propagation we again multiply by this $W$ (and then multiply element-wise by $\theta'$)

- This is why training a network is much more expensive.

The Backpropagation Algorithm

/CSCE-421/Visual%20Aids/Pasted%20image%2020260211235303.png)
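The backpropagation steps above can be sketched and checked against finite differences. Assumptions: $\theta = \tanh$, squared error $e = (h(x) - y)^2$, no bias unit on the output layer, `Ws[l-1]` holds $W^{(\ell)}$, and a hypothetical architecture with random weights. `numeric_grads` exists only to verify the backprop result.

```python
import numpy as np

def forward(Ws, x):
    """Forward propagation (theta = tanh, no bias unit on the output layer)."""
    xs = [np.concatenate(([1.0], x))]
    for l, W in enumerate(Ws, start=1):
        t = np.tanh(W.T @ xs[-1])
        xs.append(t if l == len(Ws) else np.concatenate(([1.0], t)))
    return xs

def backprop(Ws, x, y):
    """Sensitivities delta^(l) and gradients de/dW^(l) for e = (h(x) - y)^2."""
    xs = forward(Ws, x)                       # forward propagation comes first
    L = len(Ws)
    deltas = [None] * (L + 1)
    deltas[L] = 2.0 * (xs[L] - y) * (1.0 - xs[L] ** 2)
    for l in range(L - 1, 0, -1):
        back = (Ws[l] @ deltas[l + 1])[1:]    # W^(l+1) delta^(l+1), bias entry dropped
        deltas[l] = (1.0 - xs[l][1:] ** 2) * back  # times tanh'(s^(l)) element-wise
    grads = [np.outer(xs[l - 1], deltas[l]) for l in range(1, L + 1)]
    return deltas, grads

def error(Ws, x, y):
    return float(((forward(Ws, x)[-1] - y) ** 2).sum())

def numeric_grads(Ws, x, y, eps=1e-6):
    """Central finite differences, used only to check backprop."""
    out = []
    for l, W in enumerate(Ws):
        G = np.zeros_like(W)
        for idx in np.ndindex(W.shape):
            Wp = [V.copy() for V in Ws]
            Wm = [V.copy() for V in Ws]
            Wp[l][idx] += eps
            Wm[l][idx] -= eps
            G[idx] = (error(Wp, x, y) - error(Wm, x, y)) / (2 * eps)
        out.append(G)
    return out

rng = np.random.default_rng(1)
dims = [2, 3, 2, 1]                           # hypothetical architecture
Ws = [rng.normal(scale=0.5, size=(dims[l - 1] + 1, dims[l])) for l in range(1, len(dims))]
x, y = np.array([0.3, -0.7]), 1.0

_, grads = backprop(Ws, x, y)
for G, Nm in zip(grads, numeric_grads(Ws, x, y)):
    print(np.max(np.abs(G - Nm)))             # tiny: backprop matches finite differences
```

The finite-difference check needs two forward passes per weight, which is why backprop (one forward and one backward pass for all weights) is used in practice.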

Algorithm for Gradient Descent on Ein

/CSCE-421/Visual%20Aids/Pasted%20image%2020260211235351.png)

- This is the whole training process

- The key is the inner for loop; it computes the gradient

- You do not need to implement this loop in HW2; you will just use PyTorch functions to do this.

- Can do batch version or sequential version (SGD).
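The whole training process (inner loop: forward propagation, backward propagation, accumulate $G^{(\ell)}$; outer loop: update the weights) can be sketched as batch gradient descent. Assumptions: $\theta = \tanh$, squared error, no output bias, small random initial weights (not all zeros), and hypothetical XOR toy data, step size, and iteration count. This is a hand-written illustration, not the PyTorch approach used in HW2.

```python
import numpy as np

def forward(Ws, x):
    xs = [np.concatenate(([1.0], x))]
    for l, W in enumerate(Ws, start=1):
        t = np.tanh(W.T @ xs[-1])
        xs.append(t if l == len(Ws) else np.concatenate(([1.0], t)))
    return xs

def backprop(Ws, xs, y):
    L = len(Ws)
    deltas = [None] * (L + 1)
    deltas[L] = 2.0 * (xs[L] - y) * (1.0 - xs[L] ** 2)
    for l in range(L - 1, 0, -1):
        deltas[l] = (1.0 - xs[l][1:] ** 2) * (Ws[l] @ deltas[l + 1])[1:]
    return deltas

def train(X, Y, dims, eta=0.1, iterations=2000, seed=0):
    """Batch gradient descent on E_in (squared error, tanh units)."""
    rng = np.random.default_rng(seed)
    Ws = [rng.normal(scale=0.5, size=(dims[l - 1] + 1, dims[l])) for l in range(1, len(dims))]
    N, history = len(X), []
    for t in range(iterations):
        Gs = [np.zeros_like(W) for W in Ws]         # G^(l) = 0 * W^(l)
        ein = 0.0
        for x, y in zip(X, Y):                      # loop over samples
            xs = forward(Ws, x)                     # forward propagation
            deltas = backprop(Ws, xs, y)            # backward propagation
            ein += float(((xs[-1] - y) ** 2).sum()) / N
            for l in range(1, len(Ws) + 1):
                Gs[l - 1] += np.outer(xs[l - 1], deltas[l]) / N
        history.append(ein)
        Ws = [W - eta * G for W, G in zip(Ws, Gs)]  # update weights
    return Ws, history

X = np.array([[1.0, 1.0], [1.0, -1.0], [-1.0, 1.0], [-1.0, -1.0]])
Y = np.array([-1.0, 1.0, 1.0, -1.0])               # XOR labels
Ws, history = train(X, Y, dims=[2, 3, 1])
print(history[0], history[-1])
```

Moving the weight update inside the sample loop (and dropping the division by `N`) turns this batch version into the sequential (SGD) version.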

Digits Data

/CSCE-421/Visual%20Aids/Pasted%20image%2020260211235448.png)